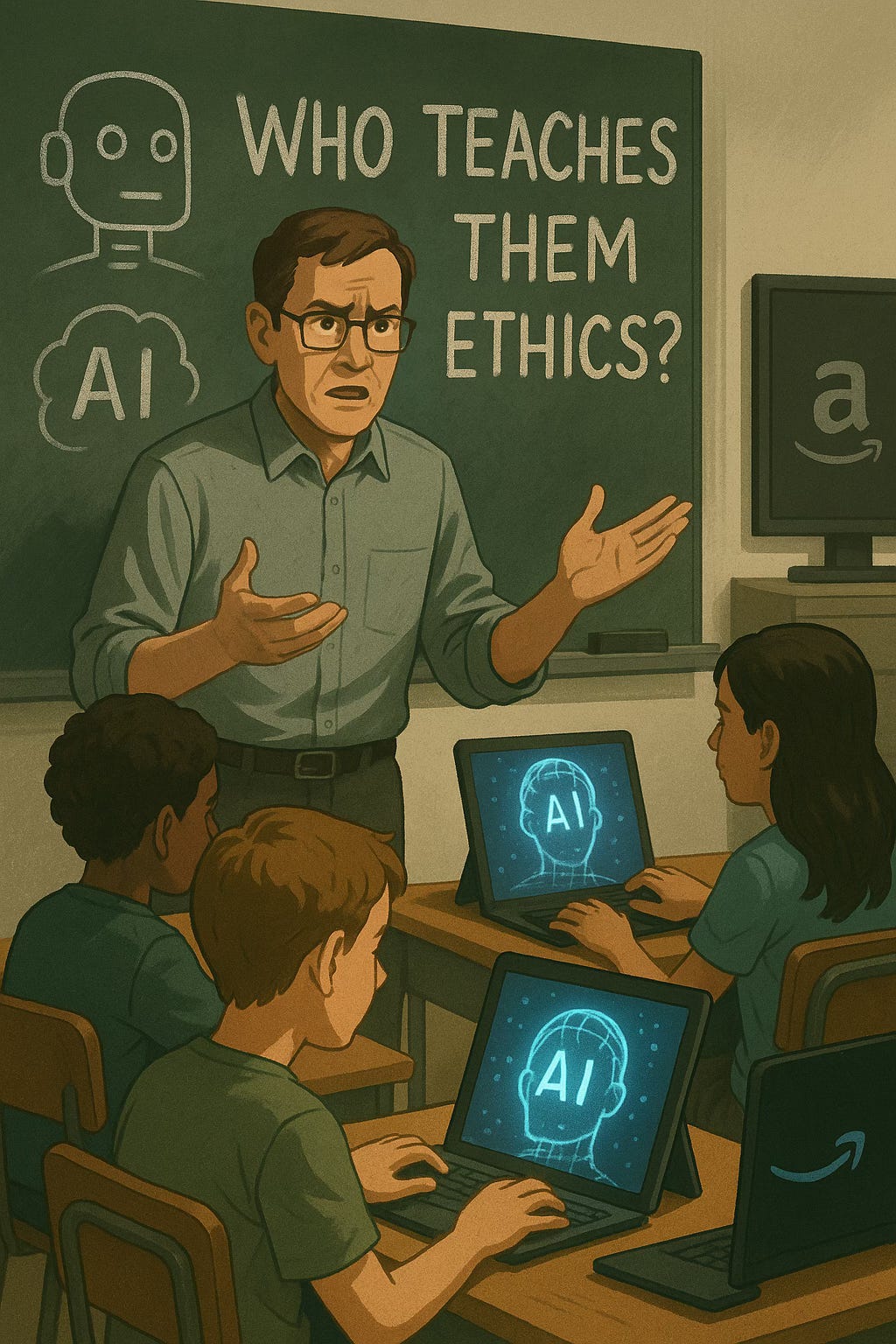

One Million Australian Kids to Learn AI

But Who Teaches Them Ethics?

Australia plans to teach one million children the fundamentals of artificial intelligence within three years. Backed by Amazon Web Services (AWS), the initiative promises to close the country’s digital skills gap and prepare the next generation for an AI‑powered economy.

It sounds like a win for education. But when a private tech giant helps define how children understand AI, the question becomes: whose values are being taught?

A Corporate Curriculum in Public Schools

AWS has pledged major funding for training, bootcamps, and classroom content. The scale is national: primary and secondary students across Australia will be funneled through AI literacy programs developed with industry partners.

Critics worry this is less about education than market capture. If AWS supplies the tools, frameworks, and even certifications, students risk learning AI through a single corporate lens. On that view, the curriculum is not neutral knowledge but brand‑building at national scale.

The Missing Piece: Ethics

AI literacy isn’t just coding or software use. It also requires civic and moral reasoning:

Bias & fairness: Who is harmed when algorithms fail?

Surveillance & privacy: Should data‑hungry corporations shape how kids think about information ownership?

Labor displacement: What jobs might vanish—and who bears the risk?

Without explicit, independent ethical frameworks, students may leave these programs seeing AI as an unquestioned good rather than a contested technology with social and political consequences.

Government Policy vs. Corporate Ambition

Australia has lagged in tech education investment, and AWS’s offer fills a real need. But it also exposes a policy vacuum: why is the ethical dimension of AI being left to corporations to interpret? In the race to “future‑proof” the workforce, policymakers risk abdicating responsibility for the civic side of education.

This mirrors a global trend in which private firms become de facto curriculum designers. Governments step back—grateful for investment—but at what long‑term cost?

The Future Classroom

Left unchecked, a corporate-led curriculum carries three risks for the classroom:

Narrowed perspectives: AI seen mainly as a productivity tool, not a domain with ethical dilemmas.

Vendor lock‑in: Schools become dependent on private infrastructure and certifications.

Cultural shaping: A generation’s first “AI lessons” carry corporate branding and assumptions.

The Takeaway

Teaching one million kids AI is not just a skills program; it is a cultural intervention. If governments don’t define ethical and civic boundaries for AI education, corporations will do it for them. Australia can produce students fluent in the language of algorithms; the challenge is ensuring they are equally fluent in the values that should guide how those algorithms are used.